
Khai Loong Aw

I am a Computer Science PhD student at Stanford University, currently working on computer vision and neuro-/cognitive science in the NeuroAI Lab. I am very fortunate to be advised by Daniel Yamins. Previously, I used NLP techniques to improve LLMs, making them more human-like and more predictively accurate models of language processing in the human brain. In 2023, I worked with Antoine Bosselut and Martin Schrimpf at EPFL. In 2022, I worked with Mariya Toneva at the MPI for Software Systems. In my free time, I like to draw, rock climb, and cook. When I was young, I also represented Singapore in chess.


Research

Khai is a PhD student in the Computer Science Department at Stanford University. He is interested in building visual-cognitive world models capable of tackling the complex tasks that humans perform. By training these systems under developmentally realistic constraints, he aims to discover the mechanisms, structures, and inductive biases that enable human babies to acquire these abilities. Before joining Stanford, he explored ways to train AI language models so that they align with language processing mechanisms in the human brain. (See Google Scholar for the full list of papers.)


Taming generative video models for zero-shot optical flow extraction

Seungwoo Kim*, Khai Loong Aw*, Klemen Kotar*, Cristobal Eyzaguirre, Wanhee Lee, Yunong Liu, Jared Watrous, Stefan Stojanov, Juan Carlos Niebles, Jiajun Wu, Daniel Yamins

NeurIPS, 2025

arXiv / Website / GitHub

We design KL-tracing, a novel method that uses the KL divergence of prediction logits to extract state-of-the-art optical flow from autoregressive generative video models. The key insight is our principled approach of using large world models to perform visual tasks (and to extract visual intermediates for downstream use), because they capture challenging, long-tailed dynamics better than specialized models trained on limited data.


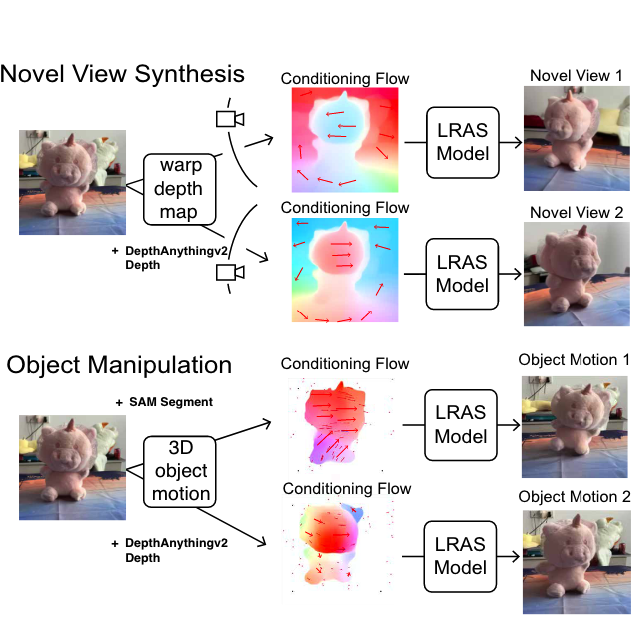

3D Scene Understanding Through Local Random Access Sequence Modeling

Wanhee Lee, Klemen Kotar, Rahul Venkatesh, Jared Watrous, Honglin Chen, Khai Loong Aw, Daniel Yamins

2025

arXiv

We propose Local Random Access Sequence (LRAS), an autoregressive generative model architecture. Using optical flow as an intermediate representation, LRAS achieves state-of-the-art novel view synthesis and 3D object manipulation. The broader idea is to unify diverse visual tasks, including 3D scene understanding and generation, in a generalist foundation model.


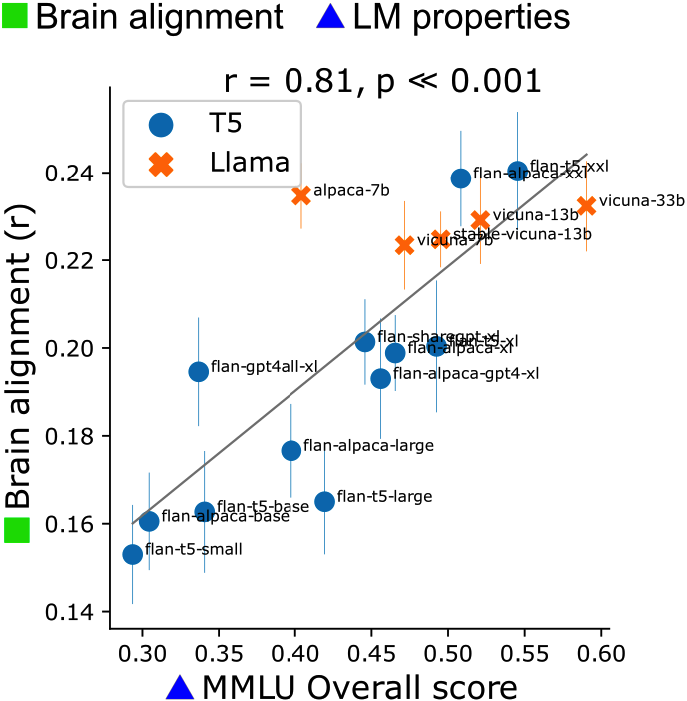

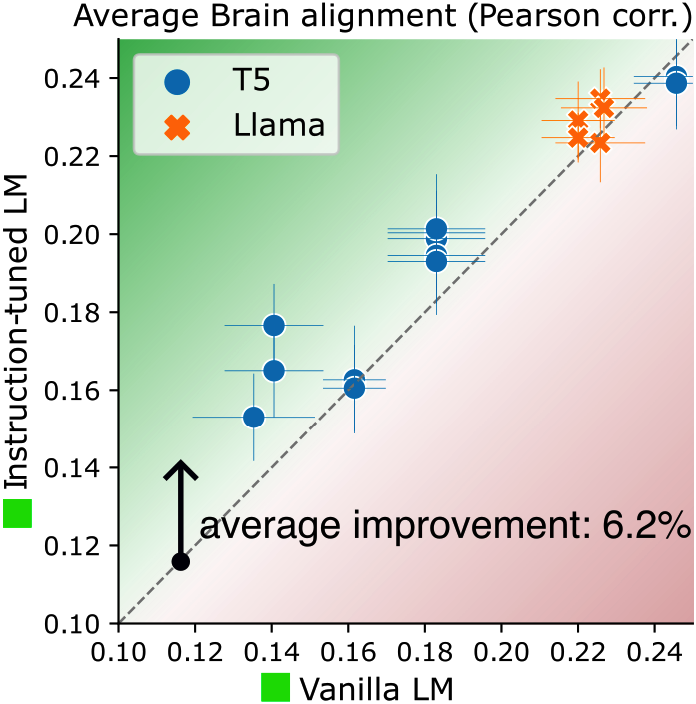

Instruction-tuning Aligns LLMs to the Human Brain

Khai Loong Aw, Syrielle Montariol, Badr AlKhamissi, Martin Schrimpf, Antoine Bosselut

COLM, 2024

arXiv

Our goal is to build better, more human-like, and more predictively accurate models of language processing in the human brain by using NLP techniques. We show that instruction-tuning improves the alignment of LLMs with human brain activity, with model size and world knowledge playing key roles.


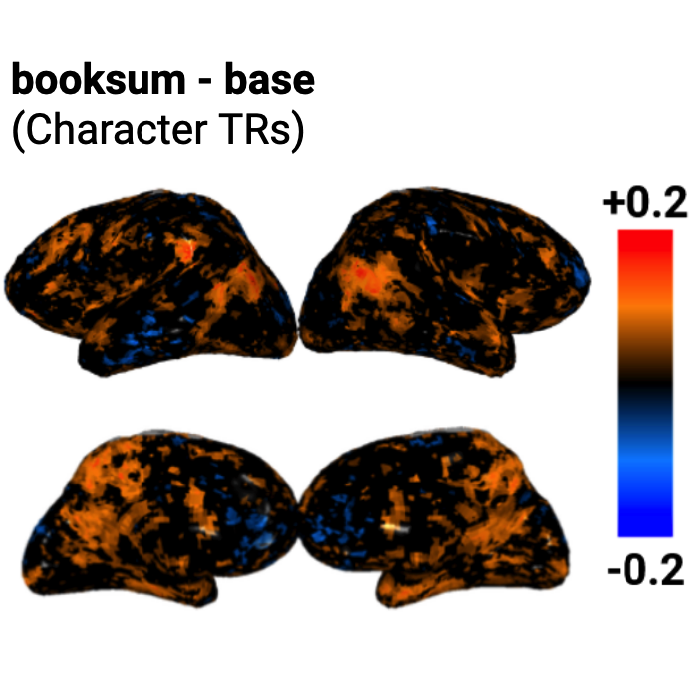

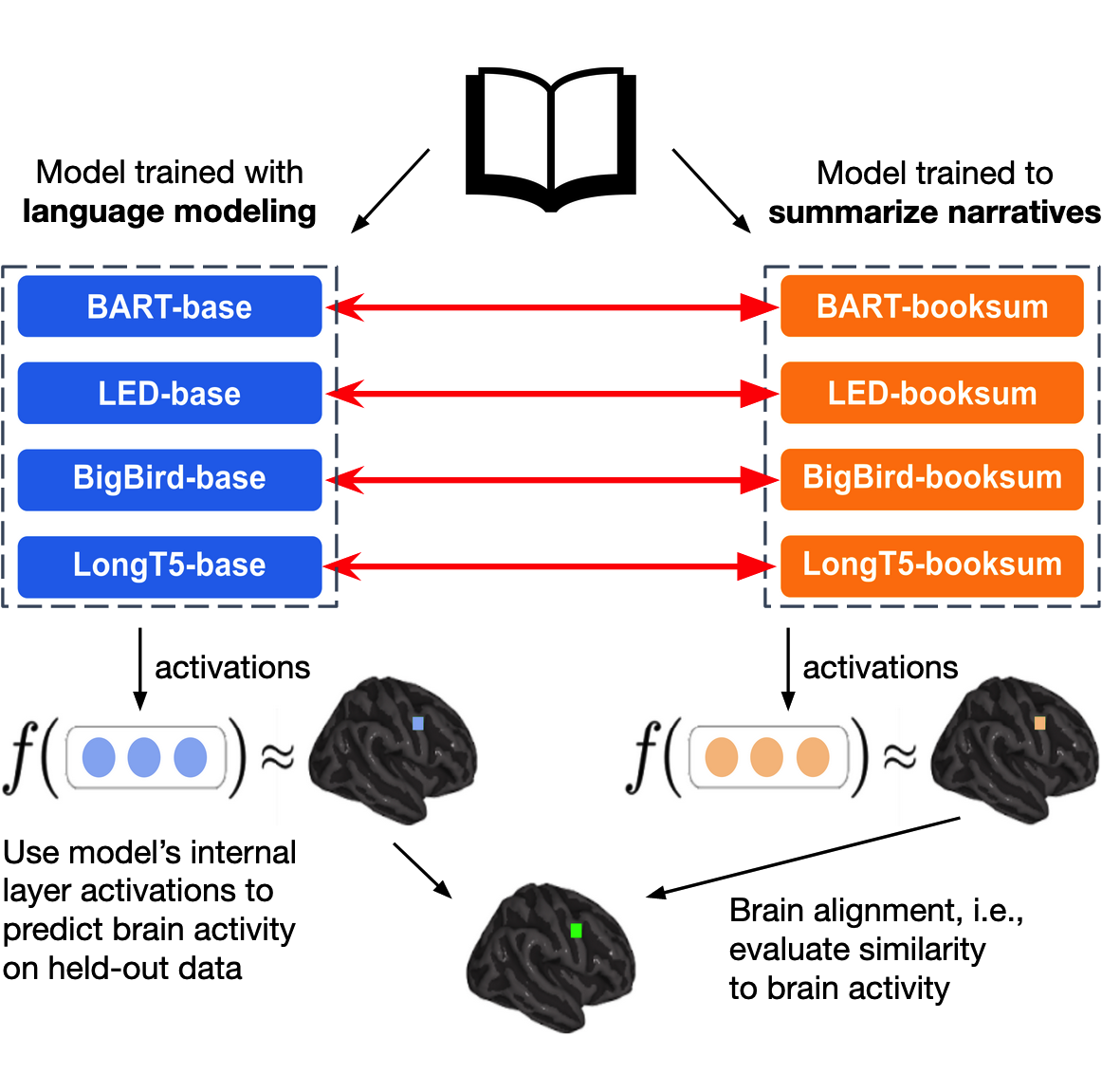

Training language models to summarize narratives improves brain alignment

Khai Loong Aw, Mariya Toneva

ICLR, 2023 (Spotlight)

arXiv / GitHub

Our goal is to improve LLMs by taking inspiration from language processing mechanisms in the human brain. Our key insight is that humans do not just passively predict the next token, but actively summarize and distill information when reading. We show that training language models to summarize narratives, which requires a deeper understanding of characters, emotions, and relationships, results in richer representations that are more aligned with human brain activity.


Detecting False Alarms from Automatic Static Analysis Tools: How Far are We?

Hong Jin Kang, Khai Loong Aw, David Lo

ICSE, 2022 (Distinguished Paper Nominee)

arXiv / Poster / Video

We investigated how well machine learning techniques can be used to detect bugs in large-scale, real-world software projects.